Introduction

But who are these powerful organizations that can, with only slight exaggeration, call the standing of highly regarded institutions into question and lift new, lesser-known ones to the top? It is also worth examining which forms of university knowledge utilization and participation in innovation processes the evaluators recognize as inputs, if they take them into account at all. In this two-part article, we investigate these questions.

In what follows, we examine the three international rankings with the greatest reputation and thus the most significant influence. In the next part, we review the other international, regional and national university rankings, without claiming to be complete, and draw conclusions from the investigation.

The “Ranking Masterpieces”

- THE – Times Higher Education World University Rankings

- QS – QS World University Rankings

- ARWU – Academic Ranking of World Universities (Shanghai Ranking)

The palette is widened by the fact that a ranking organization typically issues several rankings. These are mostly variants of the general global ranking, which serves as the flagship: focused rankings supplemented with additional groups of evaluation factors. There are sustainability and green university rankings and rankings concerned with welfare and gender discrimination. By type of training, there are dedicated MBA rankings, rankings of master’s degrees in economics, and rankings of universities that offer degrees online. There are even rankings of secondary and primary-level educational and preparatory institutions.

Top 3 international rankings

Times Higher Education World University Rankings (THE)

The Times Higher Education World University Rankings began its independent history in 2010. Before that, from 2004, THE had published the annual THE-QS World University Rankings in cooperation with Quacquarelli Symonds (QS), the other British player among the three best-known rankings, which was founded in 1990 and deals primarily with the measurement of higher education institutions. That cooperation came to an end when the publishers decided to continue on separate paths. THE first published its annual ranking in collaboration with the media conglomerate Thomson Reuters and then, from 2014, with Elsevier, the Dutch scientific publisher that publishes such well-known journals as The Lancet and Cell and operates the ScienceDirect website.

The THE rankings are built around five groups of performance indicators: (1) the teaching and learning environment, (2) research, including its volume, income and recognition, (3) citations, i.e. the professional recognition of research, (4) international integration in terms of lecturers, students and research collaborations, and (5) industry income. [1]

Citations (3), together with publications co-authored by industrial and academic partners, are among the most common metrics; they capture the use of research resources and certain isolated, even personal, seeds of industrial cooperation. At the same time, indicators built on citations of these research results do not yet point directly to the transfer of university knowledge into business use, since they originate from the early stage of innovation, the research stage.

The research dimension (2) is another very divisive and controversial indicator, since the amounts listed under research income do not reflect the value of research services actually ordered by the market. Instead, they include all sources of research financing and research support: state funding, international and European research resources and grants, awarded project funding, and so on.

Although all of these are necessary for sustaining high-level research activities, they do not correlate in any way with the market utilization of the research results created at the university. The industry income dimension (5) does indicate which university, and typically which researcher within it, enjoys the industry’s trust. However, on the one hand, this income may still cover early-phase research aimed at exploring the applied research possibilities of basic research results, which carries only a faint hint that the results may one day appear on the market as a product. On the other hand, placing industrial research within the walls of higher education can partly be seen as outsourcing: an opportunity for capacity expansion, a channel for new ideas toward the industrial player, a form of professional groundwork for maintaining HR capacity and selecting future colleagues, and, last but not least, an investment that can also be interpreted in terms of social responsibility.

Local legislation also rewards this type of cooperation with a significant tax allowance. In our country, for example, companies are better off cooperating with universities than not, because they can reduce their tax base by up to three times the amount spent on such cooperation. It should also be mentioned that the industry income dimension (5) carries a weight of only 2.5% in establishing the ranking. The indicator system of the Times Higher Education World University Rankings, including the weight of the industry income metric, is described in the methodology. [1]
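To put the 2.5% weight in perspective, the following minimal sketch computes a weighted composite score over the five indicator groups. Only the 2.5% industry income weight is stated in this article; the other four weights (30%, 30%, 30% and 7.5%) are assumptions taken from the 2023 methodology [1], and the pillar scores and the function name `composite_score` are purely hypothetical.

```python
# Pillar weights in percent. Only the 2.5% industry income weight is stated
# in the article; the other four are assumptions based on the methodology [1].
WEIGHTS = {
    "teaching": 30.0,
    "research": 30.0,
    "citations": 30.0,
    "international_outlook": 7.5,
    "industry_income": 2.5,
}


def composite_score(pillar_scores):
    """Return the weighted average of pillar scores (each on a 0-100 scale)."""
    total_weight = sum(WEIGHTS.values())
    weighted_sum = sum(WEIGHTS[name] * pillar_scores[name] for name in WEIGHTS)
    return weighted_sum / total_weight


# Two hypothetical universities that differ only in industry income:
# one scores nothing on that pillar, the other scores the maximum.
base = {
    "teaching": 60.0,
    "research": 60.0,
    "citations": 60.0,
    "international_outlook": 60.0,
    "industry_income": 0.0,
}
best = dict(base, industry_income=100.0)

gain = composite_score(best) - composite_score(base)
print(f"Largest possible gain from industry income: {gain:.1f} points")
```

Under these assumed weights, even moving from the worst to the best possible industry income score shifts the composite by at most 2.5 points out of 100.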

Thus, the above indicators are essentially unsuitable for mapping and characterizing the research utilization dimension. Even so, as we will see later, the industry income dimension (5) makes this one of the more progressive rankings with respect to innovation, understood as the market utilization of research results.

The picture is further nuanced by the fact that, since 2019, THE has also issued a number of specific, so-called “impact rankings”, one of which focuses on innovation, university infrastructure and corporate relations and compiles its metrics accordingly. [2]

According to the available information, for measurement purposes university spin-offs are registered companies created to utilize intellectual property generated at the higher education institution. The metric covers spin-offs created on or after January 1, 2000, that were founded at least three years ago and are still active. The broader methodological guide establishes that, for the purposes of the evaluation, any enterprise created to utilize university research results counts as a spin-off, regardless of its ownership structure. [3]
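The three conditions above can be sketched as a simple filter. This is only an illustration under stated assumptions: the `SpinOff` record, the function names and the sample companies are hypothetical, and measuring "at least three years ago" against a chosen reference date is our reading of the rule, not something the methodology guide spells out here.

```python
from dataclasses import dataclass
from datetime import date

EARLIEST_FOUNDING = date(2000, 1, 1)  # "created on or after January 1, 2000"
MIN_AGE_YEARS = 3                     # "founded at least three years ago"


@dataclass
class SpinOff:
    """Hypothetical record of a company utilizing university IP."""
    name: str
    founded: date
    active: bool


def years_before(day, years):
    """Return the same calendar day `years` earlier (Feb 29 falls back to Feb 28)."""
    try:
        return day.replace(year=day.year - years)
    except ValueError:
        return day.replace(year=day.year - years, day=28)


def counts_as_spin_off(company, reference_date):
    """Apply the three conditions: founding window, minimum age, still active."""
    latest_founding = years_before(reference_date, MIN_AGE_YEARS)
    return company.active and EARLIEST_FOUNDING <= company.founded <= latest_founding


# Hypothetical sample data and an assumed reference date.
reference = date(2022, 1, 1)
companies = [
    SpinOff("Alpha", date(1998, 5, 1), True),   # founded before 2000
    SpinOff("Beta", date(2010, 3, 15), True),   # meets all three conditions
    SpinOff("Gamma", date(2020, 6, 1), True),   # younger than three years
    SpinOff("Delta", date(2005, 9, 9), False),  # no longer active
]
eligible = [c.name for c in companies if counts_as_spin_off(c, reference)]
print(eligible)
```

Note that ownership structure plays no part in the check, in line with the guide's statement that it is irrelevant to the definition.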

QS World University Rankings (Quacquarelli Symonds) [4]

Nevertheless, looking at the “International Research Network” index [5], which appears relevant, and at its Research sub-metric with a view to further analysis, we can conclude that they are related neither to the utilization of the intellectual property created by the university nor to the number of spin-offs established. [6]

At the same time, as mentioned earlier, QS publishes a number of other rankings in addition to its flagship World University Rankings. [7] One of these is the QS Star rating system. [8] [9] The basic “QS Star rating” focuses on 5 areas: academic recognition (teaching), employer recognition (employability), international integration (internationalization), and research or academic development; from this last pair, the university under evaluation chooses which area is to be rated. [10]

However, among the QS Star ratings there is also a separate rating for technology- and innovation-intensive research universities, in which the number of spin-off companies counts toward the result. [11] To achieve the maximum score, at least 5 such companies must have been founded in the last 5 years, and they must already operate without university support at the time of the assessment.

However, public sources do not reveal whether there are any restrictions on the ownership structure of the utilization company, and the sub-page does not lead to a more detailed methodological description. It also remains unclear to the interested analyst what falls under “operation free of university support” in the case of, for example, foundation universities (e.g. with regard to financing provided by other corporate interests of the members of the board of trustees).
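The QS Star spin-off criterion described above (at least 5 companies founded in the last 5 years, all operating without university support) can be sketched as follows. The function names and the tuple layout are hypothetical, counting the "last 5 years" by founding year is an assumption, and the `independent` flag simply sidesteps the open question of what exactly qualifies as operating free of university support.

```python
REQUIRED_SPIN_OFFS = 5  # "at least 5 such companies"
WINDOW_YEARS = 5        # "founded in the last 5 years"


def qualifying_spin_offs(spin_offs, assessment_year):
    """Count spin-offs founded within the window that run without university support.

    Each spin-off is a hypothetical (founding_year, independent) tuple.
    """
    earliest_year = assessment_year - WINDOW_YEARS
    return sum(
        1
        for founding_year, independent in spin_offs
        if earliest_year <= founding_year <= assessment_year and independent
    )


def reaches_maximum_score(spin_offs, assessment_year):
    """True if the spin-off criterion for the maximum score is met."""
    return qualifying_spin_offs(spin_offs, assessment_year) >= REQUIRED_SPIN_OFFS


# Hypothetical university assessed in 2022.
portfolio = [
    (2018, True),
    (2019, True),
    (2019, False),  # still relies on university support
    (2020, True),
    (2021, True),
    (2015, True),   # founded outside the 5-year window
]
print(qualifying_spin_offs(portfolio, 2022))   # 4 companies qualify
print(reaches_maximum_score(portfolio, 2022))  # False: one short of the threshold
```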

Academic Ranking of World Universities (Shanghai Ranking) [12]

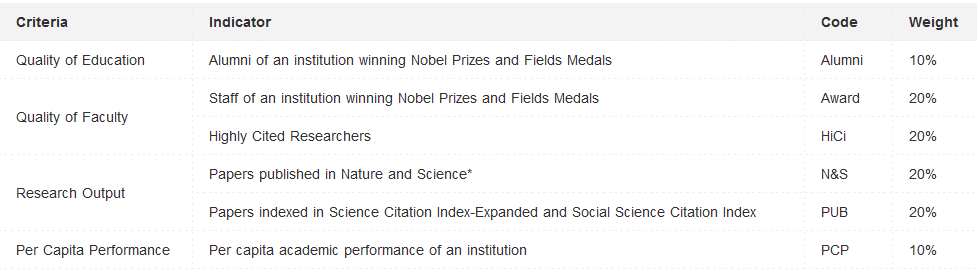

Its evaluation methodology is very simple, one might even say puritanical: it derives a sixth, weighted value from only 5 statistical indicators. The five factors are the number of alumni who have won a Nobel Prize and of staff who have won high-ranking scientific awards (a measure characterizing the quality of education), the number of highly cited researchers (a measure of the quality of faculty), and the number of papers published in the journals Nature and Science and of papers indexed in the Science Citation Index (a measure characterizing the effectiveness of research); the sixth value is a measure of academic performance per capita.

Based on the above, it can be concluded that even though many universities mention, in the free-text self-introduction published alongside their scores, that intellectual property created at the university is channeled into utilization companies [13] [14] [15] [16] [17] [18] [19], the evaluation parameters do not allow this to be reflected. In the first four of the five indicator areas, performance measurement is based primarily on scientific publication and recognition within science, not on the applied utilization of scientific results.

By reviewing the methodological description, it can be established that the ARWU ranking completely ignores the degree of industrial utilization of the intellectual creations created in the higher education institution, including the number of spin-off companies that utilize university research results. [20]

(See also regional/national rankings in our next article: Greater China Rankings!)

Having reviewed the top three, we will next take a closer look at the other important international, regional and national rankings. Stay tuned!

Further global rankings

U.S. News Best Global Universities Rankings [21]

U.S. News is a mixed publisher: it issues global, regional and national rankings, and it covers higher education institutions as well as elementary and secondary education. Its university ranking covers 2,165 higher education institutions in the USA and 94 other countries, ranked annually on 13 indicators, and is now being published for the ninth time.

The methodological description [22] shows that the indicators are predominantly publication- and citation-centric and include no measure of the industrial utilization of the intellectual property created or of the number of spin-off businesses established. Thus, the number of utilization enterprises does not affect the ranking achieved.

CWTS Leiden Ranking [23]

The Leiden ranking is prepared and presented by the Center for Science and Technology Studies (CWTS) of the University of Leiden in the Netherlands. The CWTS Leiden Ranking 2022 is based on bibliographic data from the Web of Science database produced by Clarivate Analytics. The system’s main strength is the presentation of data: by viewing the data along two variables, digital infographics can easily be created from them.

Although the university does not publish a detailed methodological description, the explanation of the indicator list suggests that the industrial collaboration indicator, one of the collaboration indicators, may be relevant. However, since it is limited to publications co-authored by universities and industry actors, the number of utilization companies is irrelevant to the ranking. [24]

SCImago Institutional Rankings [25]

A higher education ranking published regularly since 2009 by Spanish universities, educational institutions and organizations. It is worth mentioning that the same body rates not only higher education institutions but also state organizations, non-profits, and even market players from industry.

Its evaluation criteria are among the more complex: 17 metrics are grouped along 3 dimensions (social factor, research factor, innovation factor), so the innovation dimension also appears. At the same time, publication results are strongly emphasized in all three dimensions.

National/regional rankings

America

The Carnegie Classification of Institutions of Higher Education [26]

A ranking limited to US universities (including research-intensive doctoral institutions), created by the Carnegie Foundation for the Advancement of Teaching and the American Council on Education (ACE). Having reviewed their extensive and detailed methodological descriptions and database publications, we conclude that the utilization of university research results in the form of spin-offs does not influence a university’s assessment to any extent. [27] [28] [29] [30]

The Center for Measuring University Performance (MUP) [31]

A system created by The Center for Measuring University Performance (UMass Amherst, Amherst, MA, and University of Florida, Gainesville, FL), used exclusively to rank US research universities. It has published an annual report since 2000; the latest available report covers 2020. The list of indicators [32] published by the evaluator shows that the utilization of knowledge created at the university through spin-off enterprises is not measured, so it does not affect the classification either.

Asia

Greater China Rankings [33]

The rating organization that publishes ARWU also publishes two Chinese university rankings: the Ranking of Top Universities in Greater China, since 2011, and the Best Chinese Universities Ranking (Mainland China edition), since 2015. Like the ARWU, they rank institutions using indicators of puritanical simplicity.

Europe

The Guardian University Guide [34]

The ranking includes only British higher education institutions. Its methodology focuses on student satisfaction and labor market performance prospects, and it uses an evaluation system for its calculations that completely ignores the research factor and thus the research utilization factor.

CHE Ranking of German Universities [35]

The German national university ranking, compiled by the German Center for Higher Education (CHE) and published by the German national weekly newspaper Die Zeit [36], focuses on university studies, education, equipment, research, and student opinions on study conditions. [37] The indicators that define the evaluation dimensions do not include any criterion for the utilization of scientific results; even in measuring research, the ranking relies mostly on publications and citations. [38]

GovGrant – University spinouts report [39]

Although this British ranking, produced by an English innovation consulting company, is not a higher education ranking in the traditional sense, it is worth looking into because it ranks universities specifically by their spin-out activity. GovGrant has a much wider scope than a university ranking and thus differs from one significantly: it analyzes not only the universities but also the industry level and the spin-off companies themselves, including the situation and condition of the country’s utilization companies, the amount of capital investment they have attracted, their estimated financial value, and their ranking. It also draws its data from different sources, the Beauhurst [40] national and PitchBook [41] international VC databases. For this reason, and because the applied methodology is not publicly disclosed, only a limited opinion can be given on how it defines a spin-off.

Summary

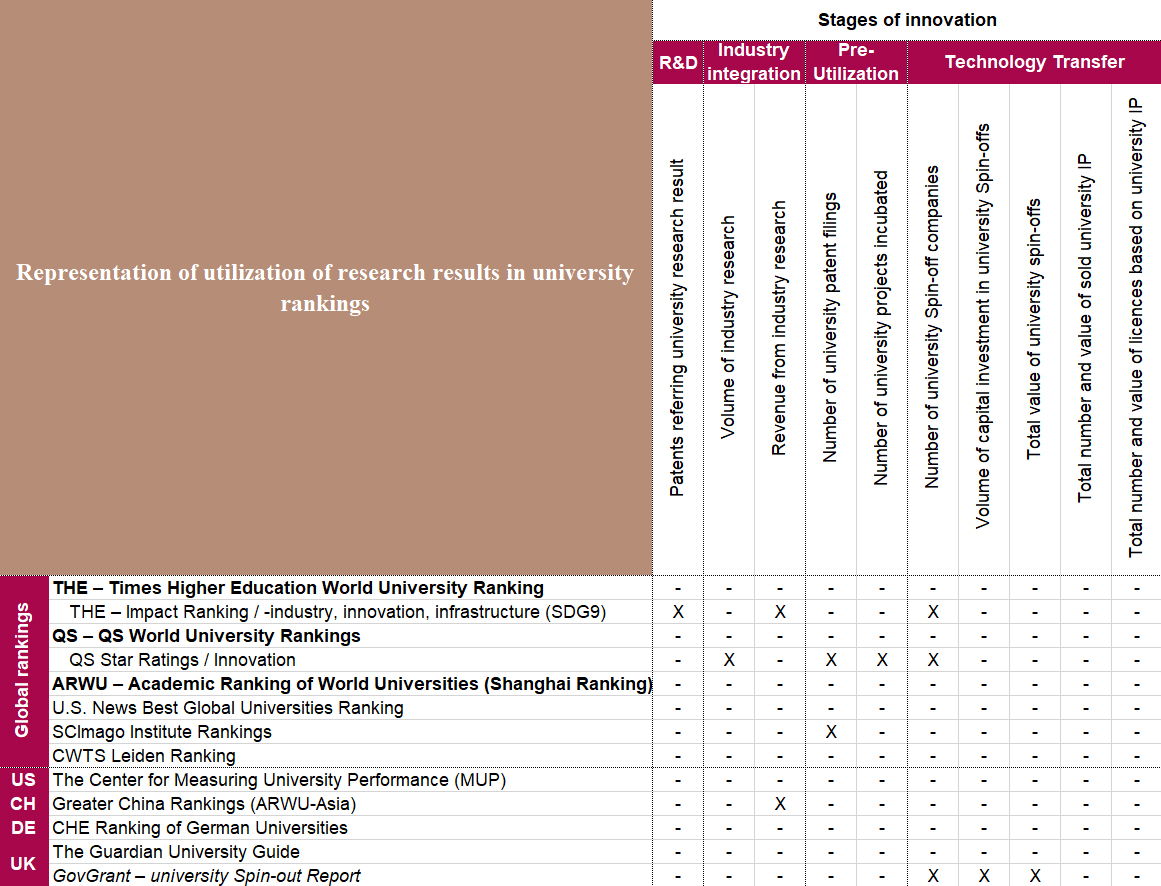

Summary table of the results found by the analysis

In terms of the applied utilization of scientific results, the other global, regional and national university rankings show a similar picture of neglect. The only exceptions, and only to a negligible extent, are SCImago and the Greater China Rankings, since one of them has at least one patent indicator and the other an industry income indicator.

The one refreshing exception is the research of the English company GovGrant, which is not considered a traditional university ranking. We hope that its good example will be contagious and that, sooner or later, the dedicated university rankings will shift toward measuring the industrial utilization of research results.

With this, we have come to the end of our study of how the utilization of university research results is measured and included in university rankings. It is now time to look at the topic in a somewhat broader context, in terms of the goals and tools of knowledge utilization.

Though there are different models in higher education, including institutions highly focused on R&D and industry cooperation and those that put more weight on traditional knowledge transfer, no one disputes that higher education institutions have a prominent role in knowledge creation, research and development, and fostering innovation. For this reason, the effective integration of the R&D potential of universities into innovation systems is of enormous importance on national, European, and global scales alike. The competitiveness of a region or country can be significantly influenced by how successfully the development potential of higher education is harnessed and scientific research results are channeled into industrial utilization.

In line with the “ever changing model-role” of higher education organizations, it may be worth discussing in more detail in the future whether research output has been given the importance it deserves, and whether adequate KPIs have been scrutinized and weighted correctly by these ranking systems. I have no doubt that this is an interesting question and a worthwhile initiative to raise.

[1] https://www.timeshighereducation.com/world-university-rankings/world-university-rankings-2023-methodology

[2] https://www.timeshighereducation.com/impact-rankings-2022-industry-innovation-and-infrastructure-sdg-9-methodology

[3] https://the-impact-report.s3.eu-west-1.amazonaws.com/Impact+2022/THE.ImpactRankings.METHODOLOGY.2022_v1.3.pdf

[4] https://support.qs.com/hc/en-gb/articles/4405955370898-QS-World-University-Rankings

[5] https://support.qs.com/hc/en-gb/articles/360021865579

[6] https://support.qs.com/hc/en-gb/sections/360005689220-Methods?page=1#articles

[7] https://www.topuniversities.com/university-rankings?qs_qp=topnav

[8] https://www.topuniversities.com/qs-stars

[9] https://www.topuniversities.com/qs-stars/home?qs_qp=topnav

[10] https://content.qs.com/qsiu/FAQ_leaflet.pdf

[11] https://www.topuniversities.com/qs-stars/rating-universities-innovation-qs-stars

[12] https://www.shanghairanking.com/rankings/arwu/2022

[13] http://www.shanghairanking.com/institution/university-of-liege

[14] http://www.shanghairanking.com/institution/ku-leuven

[15] https://www.shanghairanking.com/institution/johannes-kepler-university-linz

[16] https://www.shanghairanking.com/institution/eth-zurich

[17] http://www.shanghairanking.com/institution/university-of-twente

[18] https://www.shanghairanking.com/institution/university-of-salerno

[19] http://www.shanghairanking.com/institution/masaryk-university

[20] https://www.shanghairanking.com/methodology/arwu/2022

[21] https://www.usnews.com/education/best-global-universities

[22] https://www.usnews.com/education/best-global-universities/articles/methodology

[23] https://www.leidenranking.com/

[24] https://www.leidenranking.com/information/indicators#collaboration-indicators

[25] https://www.scimagoir.com/rankings.php?sector=Higher%20educ.

[26] https://carnegieclassifications.acenet.edu/

[27] https://carnegieclassifications.acenet.edu/downloads.php

[28] https://carnegieclassifications.acenet.edu/downloads/CCIHE2021_Research_Activity_Index_Method.pdf

[29] carnegieclassifications.iu.edu/downloads/CCIHE2021-PublicData.xlsx

[30] https://nces.ed.gov/pubs2018/2018195.pdf

[31] https://mup.umass.edu/sites/default/files/annual_report_2020.pdf

[32] https://mup.umass.edu/University-Data

[33] https://www.shanghairanking.com/methodology/arwu/2022

[34] https://www.theguardian.com/education/2022/sep/24/methodology-behind-the-guardian-university-guide-2023

[35] https://methodik.che-ranking.de/indikatoren/baustein-forschung/

[36] https://ranking.zeit.de/che/de/?wt_zmc=fix.int.zonaudev.alias.alias.zeitde.alias.che-ranking.x&utm_medium=fix&utm_source=alias_zonaudev_int&utm_campaign=alias&utm_content=zeitde_alias_che-ranking_x

[37] https://www.che.de/en/ranking-germany/

[38] https://methodik.che-ranking.de/indikatoren/baustein-forschung/

[39] https://www.govgrant.co.uk/university-spinout-report/

[40] https://www.beauhurst.com/

[41] https://pitchbook.com/